California needs biomass energy to meet its wildfire goals. Its projects keep going South


California needs to burn vegetation both for wildfire mitigation and to generate power. So why do biomass energy projects keep leaving the state?

Arbor Energy is, essentially, a poster child of the kind of biomass energy project California keeps saying it wants.

The state’s goal is to reduce wildfire risk on 1 million acres of wildlands every year, including by thinning overgrown forests, which is expected to generate roughly 10 million tons of wood waste annually. Arbor hopes to take that waste, blast it through a “vegetarian rocket engine” to produce energy, then sequester underground all of the carbon the process generates.

California has billed Arbor — and the handful of other similarly aimed projects it’s financed — as a win-win-win: wildfire mitigation, clean energy and carbon sequestration all in one.

Yet, after Arbor initially won state financial backing for a pilot project in Placer County, the El Segundo-based company’s California ambitions fell through, like many biomass projects before it.

Instead, it’s heading to Louisiana.

California, biomass energy advocates say, has struggled to get past its distrust of the technology, given traditional biomass’ checkered past of clear-cutting forests and polluting poorer communities. Further, the state’s strict permitting requirements have given residents tremendous power to veto projects and created regulatory headaches.

But many environmental groups argue that the state's track record is an example of its environmental and health protections actually working. If not done carefully, bioenergy projects run the risk of emitting carbon — not sequestering it — and polluting communities already grappling with some of the state’s dirtiest air.

“When you look at biomass facilities across California — and we’ve done Public Records Act requests to look at emissions, violations and exceedances ... the reality is that we’re not in some kind of idealized pen-and-paper drawing of what the equipment does,” said Shaye Wolf, climate science director at the Center for Biological Diversity. “In the real world, there are just too many problems with failures and faults in the equipment.”

There are simpler and safer uses for this wood waste, these critics say: fertilizer for agriculture, wood chips and mulch. Such uses may not produce carbon-negative energy, but they carry none of the risks of bioenergy projects, they say.

The Center for Biological Diversity and others advocate for a “hands-off” approach to California’s forests and urge management of the wildfire crisis through home hardening and evacuation planning alone. But fire and ecology experts say more than a century of fire suppression has made that unrealistic.

However, the sweeping forest-thinning projects these experts say are needed will cost billions, and so the state needs every source of funding it can get. “Our bottleneck right now is, how do we pay for treating a million acres a year?” said Deputy Chief John McCarthy of the California Department of Forestry and Fire Protection, who oversees the agency’s wood products and bioenergy program.

In theory, the class of next-generation biomass energy proposals popping up across California could help fund this work.

“California has an incredible opportunity,” said Arbor chief executive and co-founder Brad Hartwig. With the state’s leftover biomass from forest thinning, “we could make it basically the leader in carbon removal in the world.”

A lot of wood with nowhere to go

Biomass energy first took off in California in the 1980s after small pioneering plants at sawmills and food-processing facilities proved successful and the state’s utilities began offering favorable contracts for energy sources they deemed “renewable” — a category that included biomass.

In the late ’80s and early ’90s, the state had more than 60 operating biomass plants, providing up to 9% of the state’s residential power. Researchers estimate that at the time, the industry supported wildfire-risk-reduction treatment on about 60,000 acres of forest per year. But biomass energy’s heyday was short-lived.

In 1994, the California Public Utilities Commission shifted the state’s emphasis away from creating a renewable and diverse energy mix and toward simply buying the cheapest possible power.

Biomass — an inherently more expensive endeavor — struggled. Many plants took buyouts to shut down early. Despite California’s repeated attempts to revitalize the industry, the number of biomass plants continued to dwindle.

Today, only 23 biomass plants remain in operation, according to the industry advocate group California Biomass Energy Alliance. The state Energy Commission expects the number to continue declining because of aging infrastructure and a poor bioenergy market. California’s forest and wildfire leadership are trying to change that.

In 2021, Gov. Gavin Newsom created a task force to address California’s growing wildfire crisis. After convening the state’s top wildfire and forest scientists, the task force quickly came to a daunting conclusion: More than a century of fire suppression in California’s forests, especially in the Sierra Nevada, had dramatically increased their density, providing fires with ample fuel to explode into raging beasts.

To address it, the state needed to rapidly remove that excess biomass from hundreds of thousands, if not millions, of acres of wildlands every year through a combination of prescribed burns, rehabilitation of burned areas and mechanical forest thinning.

McCarthy estimated treating a single acre of land could cost $2,000 to $3,000. At a million acres a year, that’s $2 billion to $3 billion annually.

“Where is that going to come from?” McCarthy said. “Grants — maybe $200 million … 10% of the whole thing. So, we need markets. We need some sort of way to pay for this stuff and in a nontraditional way.”
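McCarthy’s figures can be checked with simple arithmetic. The minimal sketch below, in Python, multiplies the state’s 1-million-acre goal by his $2,000 to $3,000 per-acre estimate and compares the result with his rough $200 million grant figure; the constant and function names are illustrative and do not come from any Cal Fire tool. At $2,000 an acre, grants cover 10% of the bill, matching his remark; at $3,000 an acre, they cover closer to 7%.

```python
# Back-of-the-envelope check of the forest-treatment funding gap.
# Inputs are McCarthy's rough estimates as quoted; names are illustrative only.

ACRES_PER_YEAR = 1_000_000        # state goal: treat 1 million acres a year
COST_PER_ACRE = (2_000, 3_000)    # estimated cost range per acre, in dollars
GRANT_FUNDING = 200_000_000       # "maybe $200 million" available from grants


def funding_gap(acres: int, cost_per_acre: int, grants: int) -> tuple[int, int, float]:
    """Return (total annual cost, shortfall after grants, share covered by grants)."""
    total = acres * cost_per_acre
    shortfall = total - grants
    share_covered = grants / total
    return total, shortfall, share_covered


for cost in COST_PER_ACRE:
    total, shortfall, share = funding_gap(ACRES_PER_YEAR, cost, GRANT_FUNDING)
    print(f"${cost:,}/acre: total ${total:,}, shortfall ${shortfall:,}, grants cover {share:.1%}")
```

Either way, the shortfall runs from roughly $1.8 billion to $2.8 billion a year, which is the gap McCarthy hopes new markets can help close.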

McCarthy believes bioenergy is one of those ways — essentially, by selling the least valuable, borderline unusable vegetation from the forest floor. You can’t build a house with pine cones, needles and twigs, but you can power a bioenergy plant.

However, while biomass energy has surged in Southern states such as Georgia, projects in California have struggled to get off the ground.

In 2022, a bid by Chevron, Microsoft and the oil-drilling technology company Schlumberger to revive a traditional biomass plant near Fresno and affix carbon capture to it fell through after the U.S. Environmental Protection Agency requested the project withdraw its permit application. Environmental groups including the Center for Biological Diversity and residents in nearby Mendota opposed the project.

This year, a sweeping effort supported by rural Northern California counties to process more than 1 million tons of biomass a year into wood pellets and ship them to European bioenergy plants (with no carbon capture involved) in effect died. The project, led by Golden State Natural Resources, faced pushback from watchdog groups that feared it would harm forests and from environmental justice groups worried that processing facilities at the Port of Stockton would worsen the air quality in one of the state’s most polluted communities.

Arbor believed its fate would be different.

Bioenergy from the ground up

Before founding Arbor, Hartwig served in the California Air National Guard for six years and on a Marin County search and rescue team. He now recalls a common refrain on the job: “There is no rescue in fire. It’s all search,” Hartwig said. “It’s looking for bodies — not even bodies, it’s teeth and bones.”

In 2022, he started Arbor with the idea of taking a different approach to bioenergy than the biomass plants shuttering across California.

To understand Arbor’s innovation, start with coal plants, which burn fossil fuels to heat up water and produce steam that turns a turbine to generate electricity. Traditional biomass plants work in essentially the same way but use vegetation instead of coal as the fuel. Typically, the smoke from the burning vegetation is simply released into the air.

Small detail of the 16,000-pound proof-of-concept system being tested by Arbor that will burn biomass, capture carbon dioxide and generate electricity.

(Myung J. Chun/Los Angeles Times)

Arbor’s solution is more like a tree-powered rocket engine.

The company can use virtually any form of biomass, from wood and sticks to pine needles and brush. Arbor heats it to extreme temperatures while depriving it of the oxygen it would need to fully combust. The organic waste separates into a small amount of solid waste and a flammable gas made of carbon monoxide, carbon dioxide, methane and hydrogen.

The machine then burns the gas at extreme temperatures and pressures, driving a turbine at much higher rates than typical biomass plants do. The resulting carbon dioxide exhaust is sequestered underground.

Arbor portrays its solution as a flexible, carbon-negative and clean device: It can operate anywhere with a hookup for carbon sequestration. Multiple units can work together for extra power. All of the carbon in the trees and twigs the machine ingests ends up in the ground — not back in the air.

But biomass watchdogs warn previous attempts at technology like Arbor’s have fallen short.

This biomass process creates a dry, flaky ash mainly composed of minerals — essentially everything in the original biomass that wasn’t “bio” — that can include heavy metals that the dead plants sucked up from the air or soil. If agricultural or construction waste is used, it can include nasty chemicals from wood treatments and pesticides.

Arbor plans, at least initially, to use woody biomass taken directly from the forest, which typically contains lower levels of these dangerous ash chemicals.

Turning wood waste into gas also generates a thick, black tar composed of volatile organic compounds — which are also common contaminants following wildfires. The company says its gasification process uses high enough temperatures to break down the troublesome tar, but researchers say tar is an inevitable byproduct of this process.

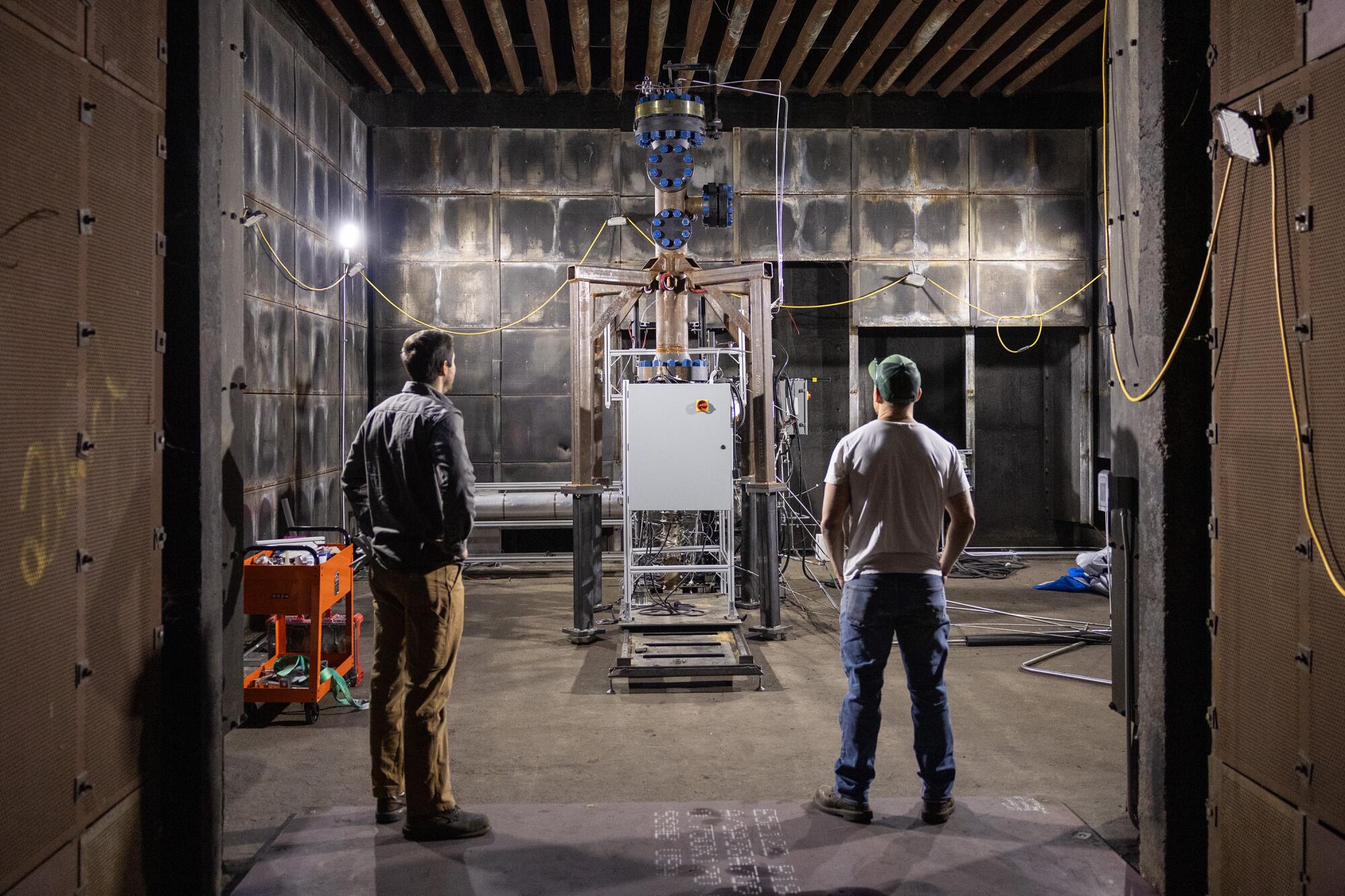

Grant Niccum, left, Arbor’s lead systems engineer, and Kevin Saboda, a systems engineer, at the company’s test site in San Bernardino. Biomass is fed into this component, compressed to 100 times atmospheric pressure and burned to create a synthetic gas.

(Myung J. Chun/Los Angeles Times)

Watchdogs also caution that the math to determine whether bioenergy projects sequester or release carbon is complicated and finicky.

“Biomass is tricky, and there’s a million exceptions to every rule that need to be accounted for,” said Zeke Hausfather, climate research lead with Frontier Climate, which vets carbon capture projects such as Arbor’s and connects them with companies interested in buying carbon credits. “There are examples where we have found a project that actually works on the carbon accounting math, but we didn’t want to do it because it was touching Canadian boreal forest that’s old-growth forest.”

Frontier Climate, along with the company Isometric, audits Arbor’s technology and operations. However, critics note that because both companies ultimately support the sale of carbon credits, their assessments may be biased.

At worst, biomass projects can decimate forests and release their stored carbon into the atmosphere. Arbor hopes, instead, to be a best-case scenario: improving — or at least maintaining — forest health and stuffing carbon underground.

When it all goes South

Arbor had initially planned to build a proof of concept in Placer County. To do it, Arbor won $2 million through McCarthy’s Cal Fire program and $500,000 through a state Department of Conservation program in 2023.

But as California fell into a budget deficit in 2023, state funding dried up.

So Arbor turned to private investors. In September 2024, Arbor reached an agreement with Microsoft in which the technology company would buy carbon credits backed by Arbor’s sequestration. In July of this year, the company announced a $41-million deal (well over 15 times the funding it ever received from California) with Frontier Climate, whose carbon credit buyers include Google, the online payment company Stripe and Meta, which owns Instagram and Facebook.
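The “well over 15 times” comparison can be reproduced from the grant figures given earlier. This short sketch assumes, as an interpretation rather than a stated fact, that California’s funding means the two 2023 awards: $2 million from Cal Fire and $500,000 from the Department of Conservation. The constant names are illustrative.

```python
# Illustrative check of the "well over 15 times" comparison.
# Assumption: California's funding = the two 2023 state awards named in the article.

CAL_FIRE_GRANT = 2_000_000       # Cal Fire wood products and bioenergy program
CONSERVATION_GRANT = 500_000     # state Department of Conservation program
FRONTIER_DEAL = 41_000_000       # July deal with Frontier Climate

state_total = CAL_FIRE_GRANT + CONSERVATION_GRANT
ratio = FRONTIER_DEAL / state_total
print(f"State awards: ${state_total:,}; the Frontier deal is {ratio:.1f} times that amount")
```

Under that assumption the deal comes to about 16 times the state money, consistent with the article’s wording.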

To fulfill those credits, Arbor would build its first commercial facility near Lake Charles, La., which would in part power nearby data centers.

“We were very excited about Arbor,” McCarthy said. “They pretty much walked away from their grant and said they’re not going to do this in California. … We were disappointed in that.”

But for Arbor, relying on the state was no longer feasible.

“We can’t rely on California for the money to develop the technology and deploy the initial systems,” said Hartwig, standing in Arbor’s plant-covered El Segundo office. “For a lot of reasons, it makes sense to go test the machine, improve the technology in the market elsewhere before we actually get to do deployments in California, which is a much more difficult permitting and regulatory environment.”

Rigger Arturo Hernandez, left, and systems engineer Kevin Saboda secure Arbor’s proof-of-concept system in the company’s San Bernardino test site after its journey from Arbor’s headquarters in El Segundo. The steel frame was welded in Texas while the valves, tubing and other hardware were installed in El Segundo.

(Myung J. Chun/Los Angeles Times)

It’s not the first next-generation biomass company based in California to build elsewhere. San Francisco-based Charm Industrial, whose technology doesn’t involve energy generation, began its sequestration efforts in the Midwest and plans to expand into Louisiana.

The American South has less stringent logging and environmental regulations, which has led biomass energy projects to flock to the area: In 2024, about 2.3% of the South’s energy came from woody biomass, up from 2% in 2010, according to the U.S. Energy Information Administration. Meanwhile, that number on the West Coast was only 1.2% and continuing its slow decline.

And, unlike in the West, companies aiming to create wood pellets to ship abroad have proliferated in the South. In 2024, the U.S. produced more than 10.7 million tons of biomass pellets, 82% of which were exported. That’s up from virtually zero in 2000. The South produced the vast majority, 84%, of the biomass pellets made last year.
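Applying those percentages to the production total gives a sense of the tonnage involved. The sketch below treats 10.7 million tons as a lower bound, since the article says production was “more than” that, and assumes the 82% and 84% shares are exact; both shares are likely rounded, so the results are approximate.

```python
# Rough tonnage behind the 2024 U.S. pellet percentages.
# Assumptions: 10.7 million tons is a floor, and the quoted shares are exact.

PRODUCTION_TONS = 10_700_000   # U.S. biomass pellet production in 2024 (lower bound)
EXPORT_SHARE = 0.82            # share of production that was exported
SOUTH_SHARE = 0.84             # share of production made in the South

exported = PRODUCTION_TONS * EXPORT_SHARE
from_south = PRODUCTION_TONS * SOUTH_SHARE
print(f"Exported: roughly {exported / 1e6:.1f} million tons or more")
print(f"Produced in the South: roughly {from_south / 1e6:.1f} million tons or more")
```

That works out to roughly 8.8 million tons shipped abroad and about 9 million tons produced in the South.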

Watchdogs warn that this lack of guardrails has allowed the biomass industry to harm the South’s forests, pollute poor communities living near biomass facilities and fall short of its climate claims.

Over the last five years, Drax — a company that harvests and exports wood pellets and was working with Golden State Natural Resources — has had to pay Louisiana and Mississippi a combined $5 million for violating air pollution laws. Residents living next to biomass plants, like Drax’s, say the operations have worsened asthma and routinely leave a film of dust on their cars.

But operating a traditional biomass facility or shipping wood pellets to Europe wasn’t Arbor’s founding goal, though powering data centers in the American South wasn’t exactly part of the plan either.

Hartwig, who grew up in the Golden State, hopes Arbor’s technology can someday return to California to help finance the solution for the wildfire crisis he spent so many years facing head-on.

“We’ve got an interest in Arkansas, in Texas, all the way up to Minnesota,” Hartwig said. “Eventually, we’d like to come back to California.”